AutoCodeGen

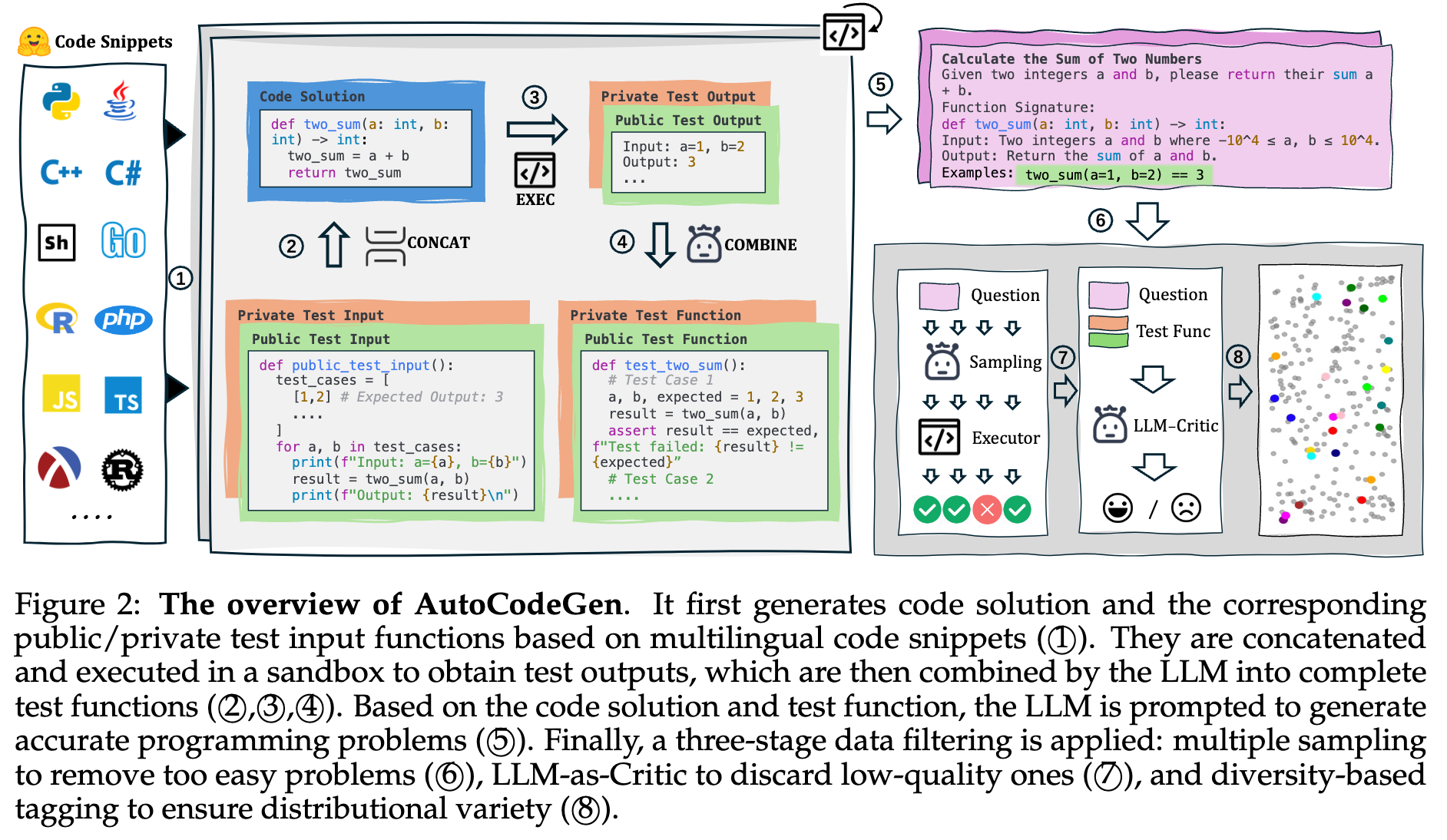

The core innovation of AutoCodeGen is to have LLMs generate test inputs, run those inputs in a sandbox to obtain the corresponding test outputs, and then generate the programming problems in reverse. Compared with existing methods such as KodCode and CodeI/O, this approach is more efficient and scalable, and it achieves better test-case coverage.
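The input-first pipeline described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the AutoCodeGen implementation. It assumes that the LLM has also produced a reference solution (here, a Python program reading stdin) to run the generated inputs against. It uses a plain `subprocess` call as a stand-in for the multilingual sandbox, which provides no isolation. It also replaces the LLM's problem-writing step with a caller-supplied function. All names (`IOPair`, `Sample`, `run_in_sandbox`, `build_sample`) are hypothetical.

```python
from __future__ import annotations

import subprocess
import sys
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class IOPair:
    input: str   # test input proposed by the LLM (assumed format: stdin text)
    output: str  # output observed by executing the reference solution


@dataclass
class Sample:
    solution: str
    tests: list[IOPair] = field(default_factory=list)
    problem: str = ""


def run_in_sandbox(code: str, stdin: str, timeout: float = 5.0) -> str | None:
    """Run Python code in a child process; return stdout, or None on crash/timeout.

    Stand-in only: a real sandbox would isolate execution and support many languages.
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            input=stdin, capture_output=True, text=True, timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return None
    return proc.stdout if proc.returncode == 0 else None


def build_sample(
    solution: str,
    test_inputs: list[str],
    write_problem: Callable[[str, list[IOPair]], str],
) -> Sample:
    sample = Sample(solution=solution)
    # Outputs come from execution rather than from the LLM, so every pair is
    # consistent with the reference solution by construction.
    for test_input in test_inputs:
        output = run_in_sandbox(solution, test_input)
        if output is not None:  # drop inputs the solution cannot handle
            sample.tests.append(IOPair(test_input, output))
    # Last step: write the problem statement "in reverse" from solution + tests.
    sample.problem = write_problem(solution, sample.tests)
    return sample


# Usage with hard-coded stand-ins for the LLM-generated pieces.
SOLUTION = "a, b = map(int, input().split())\nprint(a + b)"
sample = build_sample(
    SOLUTION,
    ["1 2", "10 -3", "oops"],  # "oops" makes the solution crash, so it is dropped
    lambda sol, tests: f"Read two integers and print their sum ({len(tests)} tests).",
)
for pair in sample.tests:
    print(repr(pair.input), "->", repr(pair.output))
```

Because the expected outputs come from execution and not from the model, a test case cannot disagree with the solution it was derived from. Inputs that crash or time out are simply discarded.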

AutoCodeBench

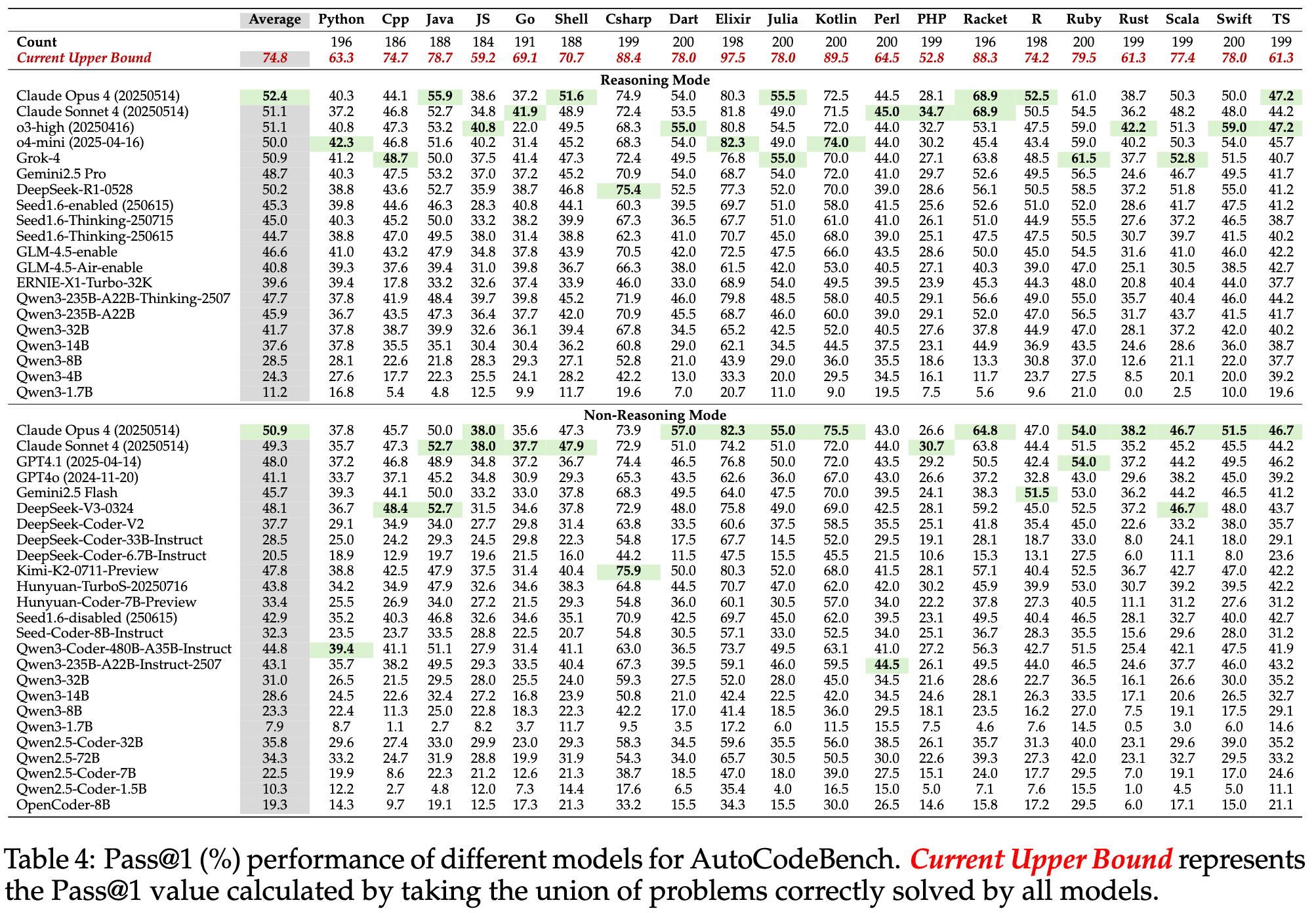

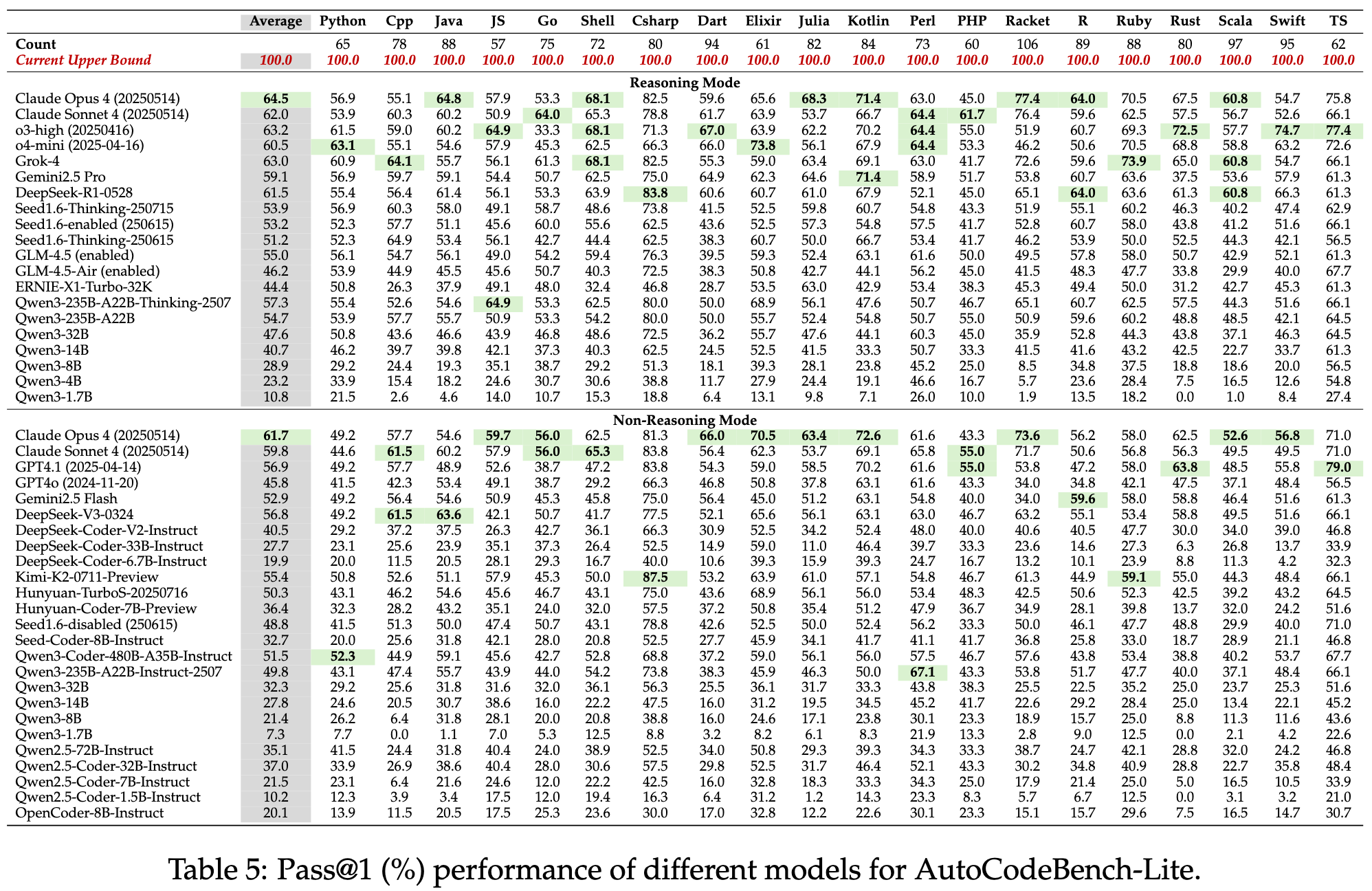

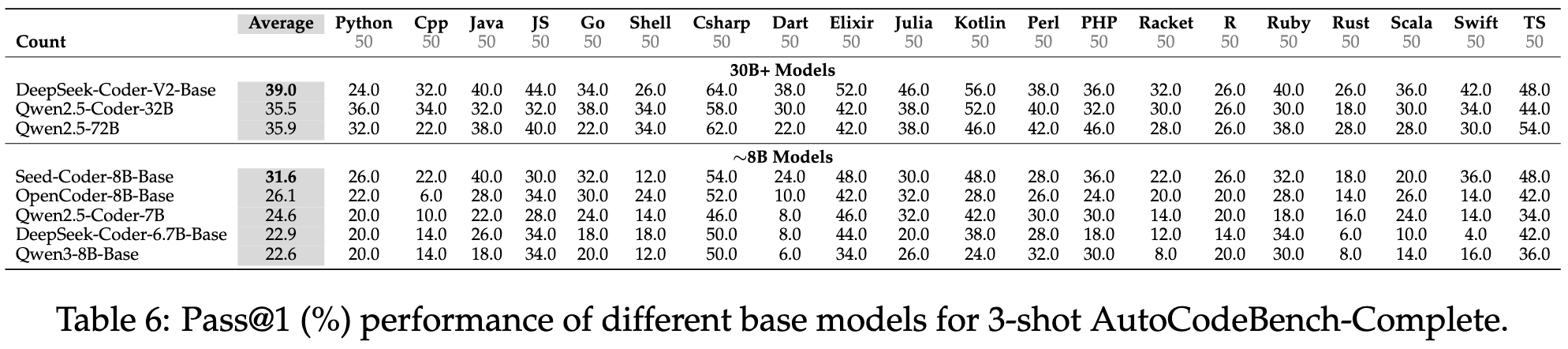

Experimental Results

Further Analysis

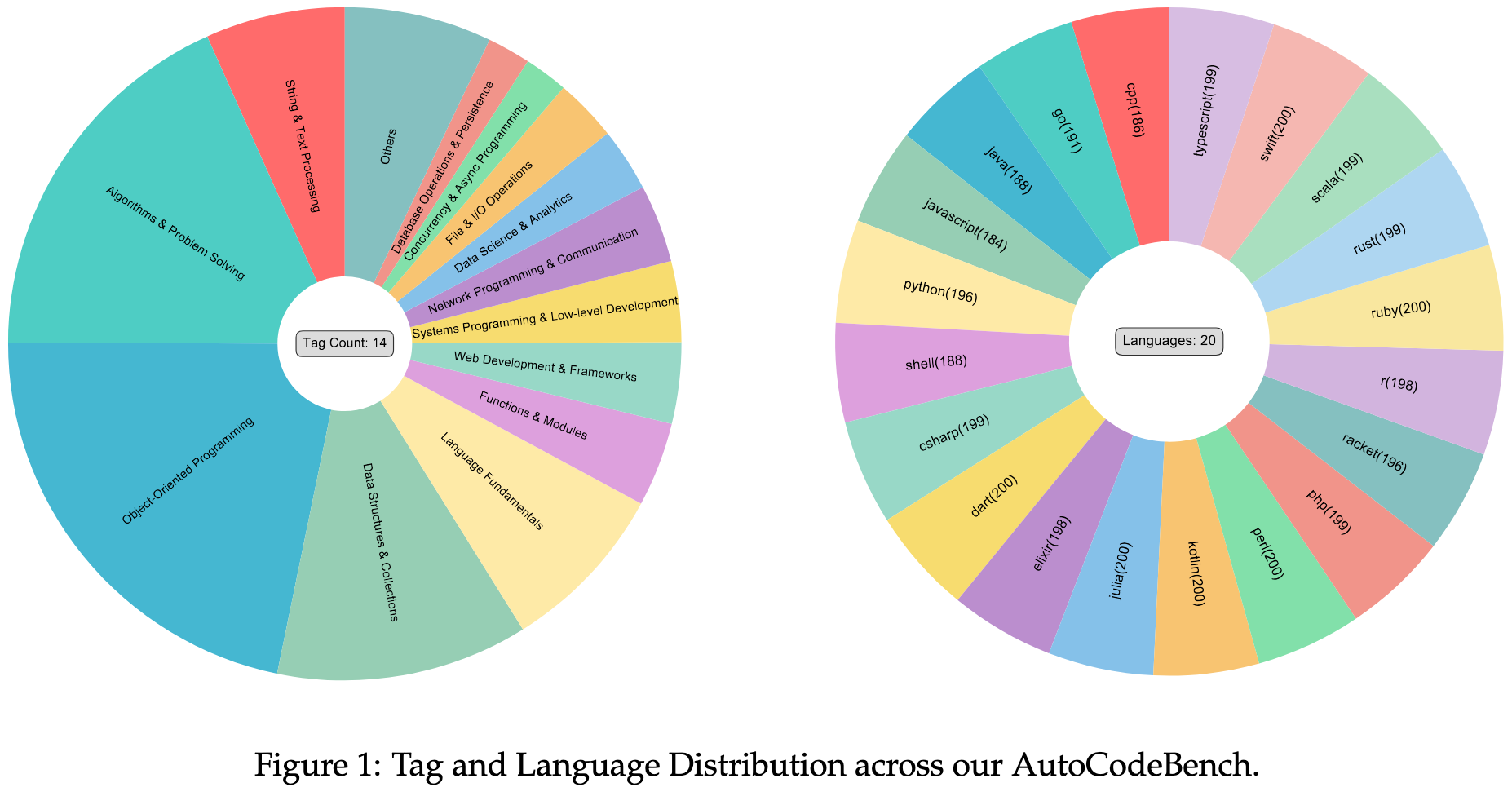

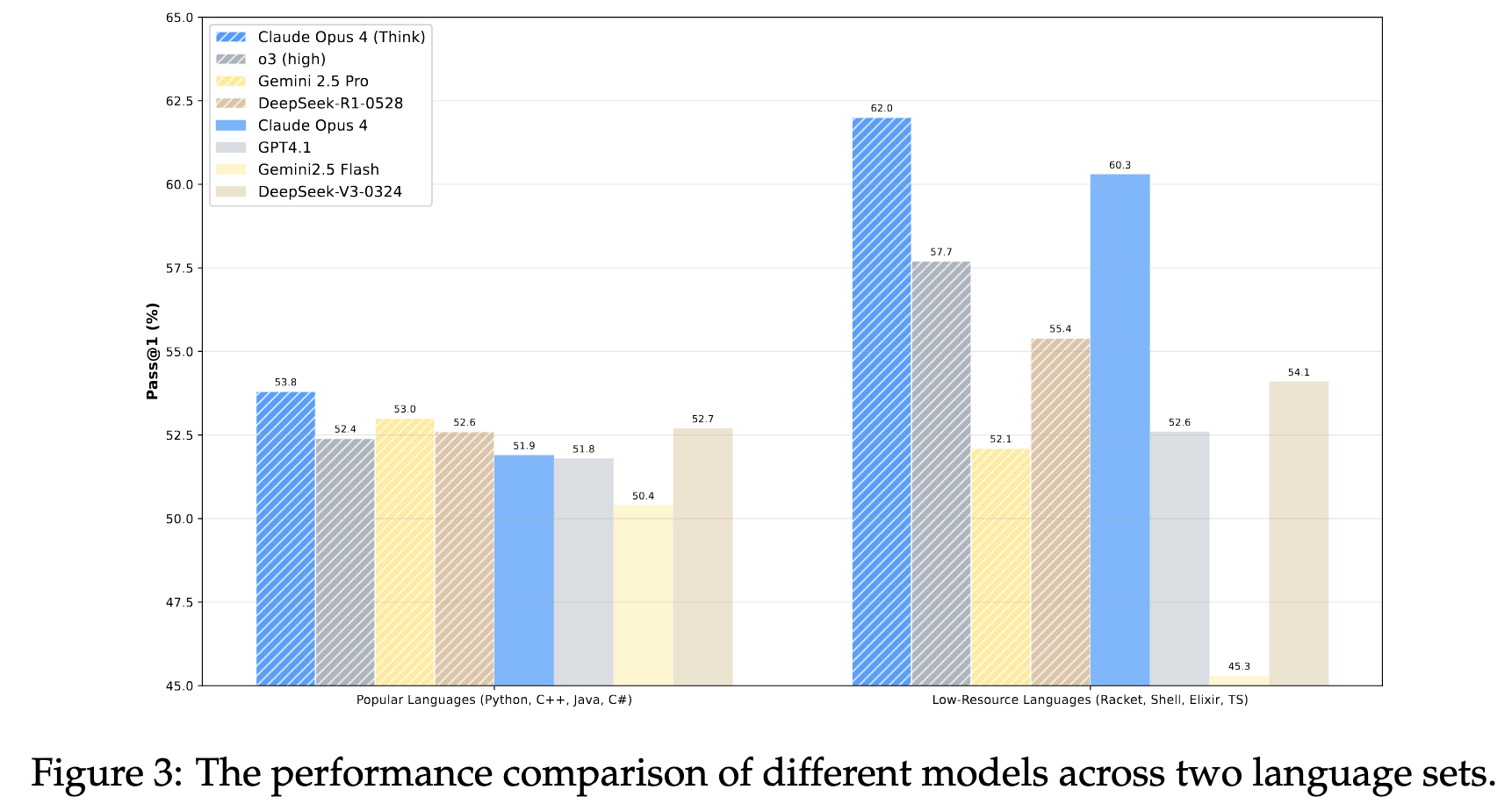

Performance differences between models are small on popular languages but large on low-resource languages.

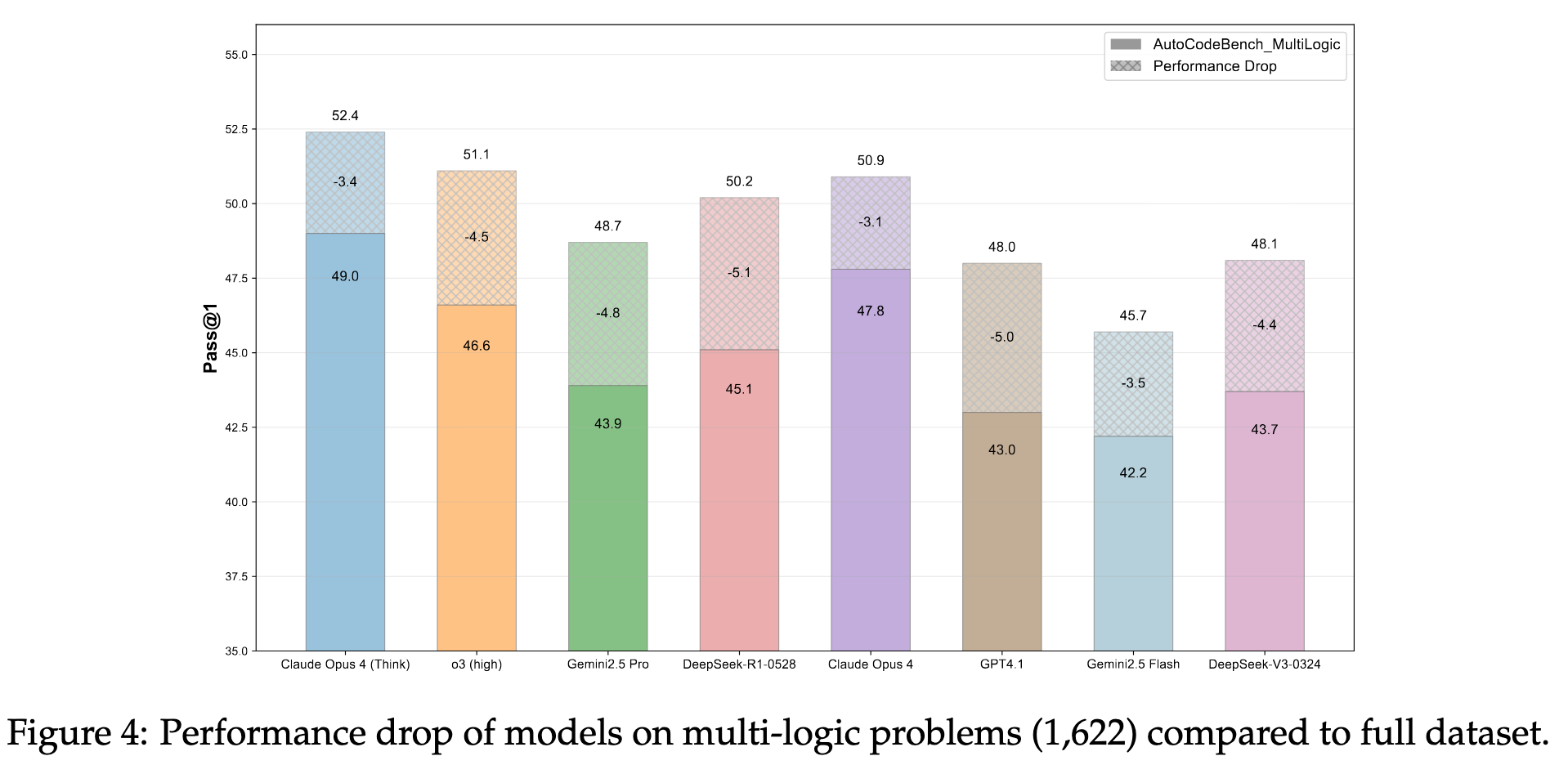

The performance of LLMs declines when faced with multi-logic programming problems.

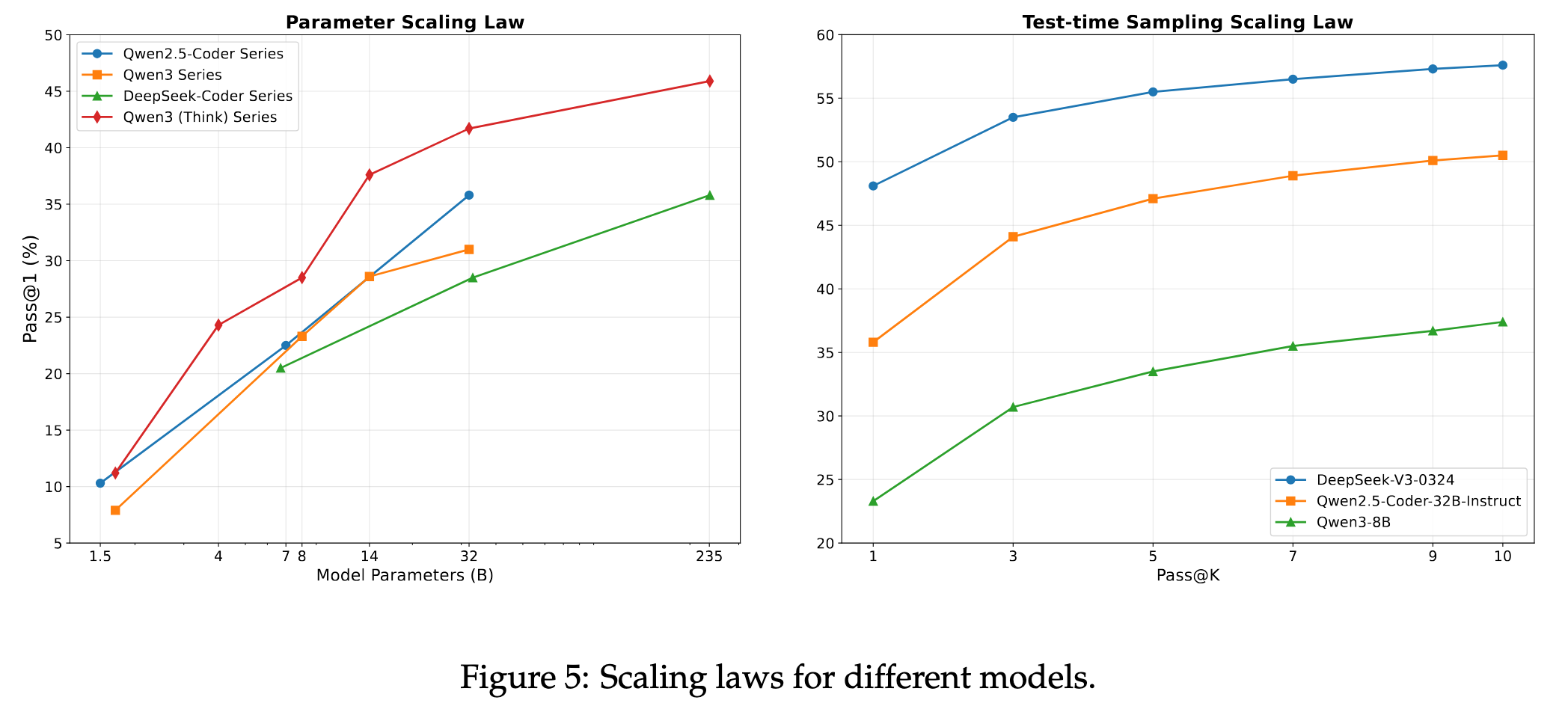

LLMs exhibit scaling laws on AutoCodeBench with respect to both parameter count and test-time sampling.
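The source does not say how test-time sampling is scored. A common choice for code benchmarks, assumed here and not taken from AutoCodeBench, is the unbiased pass@k estimator introduced alongside HumanEval. You draw n samples per problem and count the c samples that pass all tests. The estimate is then pass@k = 1 - C(n-c, k) / C(n, k). Scaling along the sampling axis would then appear as mean pass@k rising with k. The function names and the per-problem counts below are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which pass all tests."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    # 1 - P(all k samples drawn without replacement are failures).
    # math.comb returns 0 when k > n - c, giving pass@k = 1.0 in that case.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems; each entry is (n_samples, n_correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Made-up counts for three problems, 10 samples each.
results = [(10, 3), (10, 0), (10, 10)]
for k in (1, 5, 10):
    print(k, round(mean_pass_at_k(results, k), 3))
```

With these made-up counts, the estimate rises from about 0.433 at k=1 to 0.667 at k=10. The problem with zero correct samples stays at 0 for every k.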

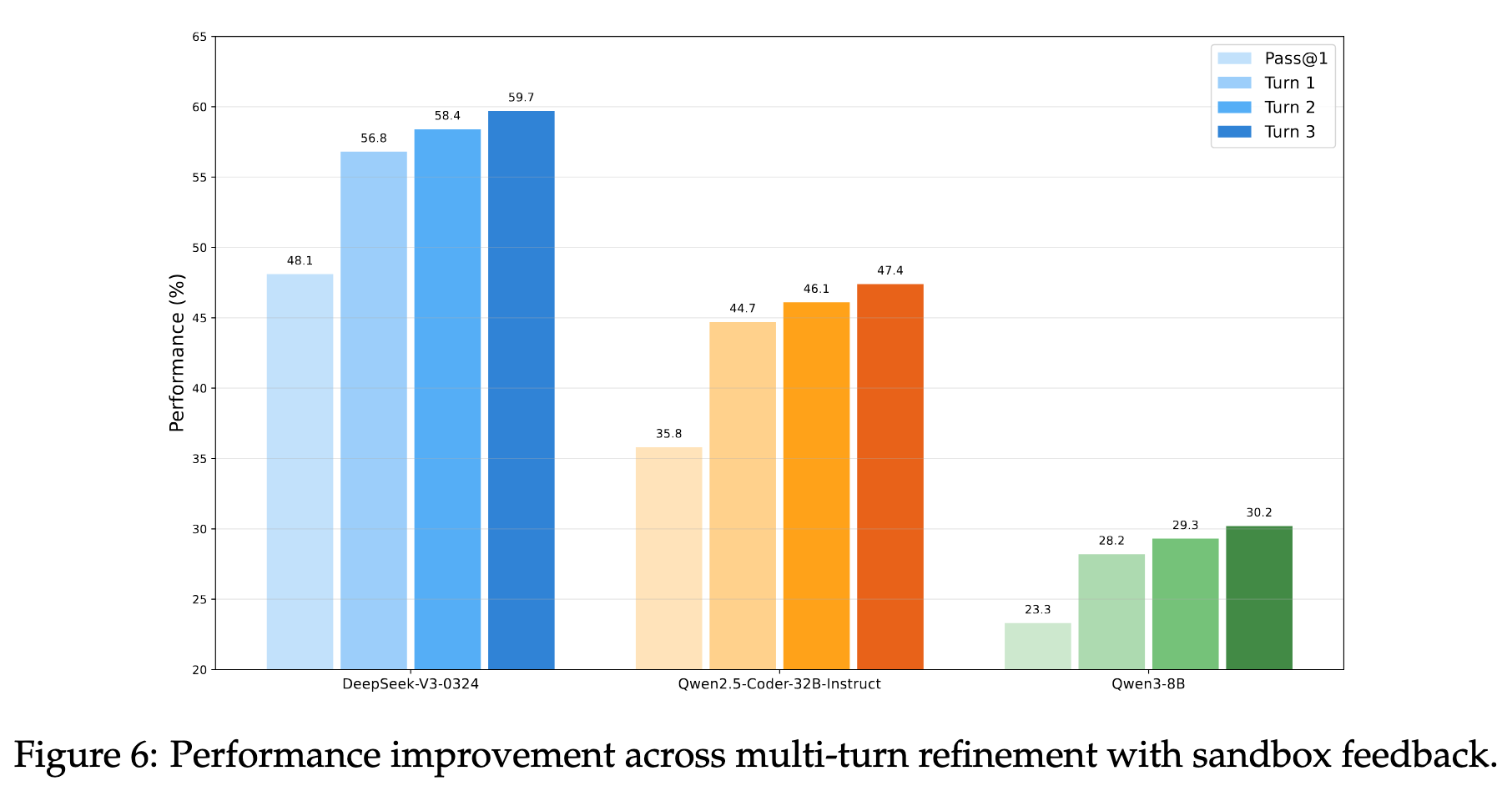

The feedback provided by our multilingual sandbox can guide the model to refine its code.
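The following is a minimal sketch of how sandbox feedback can drive refinement. It assumes a loop in which a description of the first failing test is passed back to the model for another attempt; the source does not describe the exact protocol. `toy_model` is a hypothetical stand-in for an LLM. `run_tests` runs only Python in a plain subprocess, so unlike a real multilingual sandbox it offers no isolation. All names are illustrative.

```python
from __future__ import annotations

import subprocess
import sys
from typing import Callable, Optional

# (problem, previous_code, feedback) -> new code; a hypothetical model interface.
Model = Callable[[str, Optional[str], Optional[str]], str]


def run_tests(code: str, tests: list[tuple[str, str]], timeout: float = 5.0) -> str | None:
    """Return None if every test passes, else feedback on the first failure."""
    for stdin, expected in tests:
        try:
            proc = subprocess.run(
                [sys.executable, "-c", code],
                input=stdin, capture_output=True, text=True, timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return f"Timeout on input {stdin!r}"
        if proc.returncode != 0:
            return f"Runtime error on input {stdin!r}:\n{proc.stderr.strip()}"
        # Compare with surrounding whitespace trimmed.
        if proc.stdout.strip() != expected.strip():
            return f"Wrong answer on input {stdin!r}: expected {expected!r}, got {proc.stdout!r}"
    return None


def refine(model: Model, problem: str, tests: list[tuple[str, str]],
           max_attempts: int = 3) -> tuple[str | None, int]:
    """Generate code, then feed sandbox feedback back until tests pass or attempts run out."""
    code = model(problem, None, None)
    for attempt in range(1, max_attempts + 1):
        feedback = run_tests(code, tests)
        if feedback is None:
            return code, attempt
        if attempt < max_attempts:
            code = model(problem, code, feedback)
    return None, max_attempts


def toy_model(problem: str, previous_code: str | None, feedback: str | None) -> str:
    # Hypothetical LLM stand-in: buggy first attempt, fixed once it sees feedback.
    if feedback is None:
        return "a, b = map(int, input().split())\nprint(a - b)"
    return "a, b = map(int, input().split())\nprint(a + b)"


TESTS = [("1 2", "3\n"), ("10 -3", "7\n")]
code, attempts = refine(toy_model, "Print the sum of two integers.", TESTS)
print(attempts, code is not None)
```

The loop stops as soon as every test passes. It never asks the model for a new attempt after the final round, so `max_attempts` bounds both sandbox runs and model calls.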